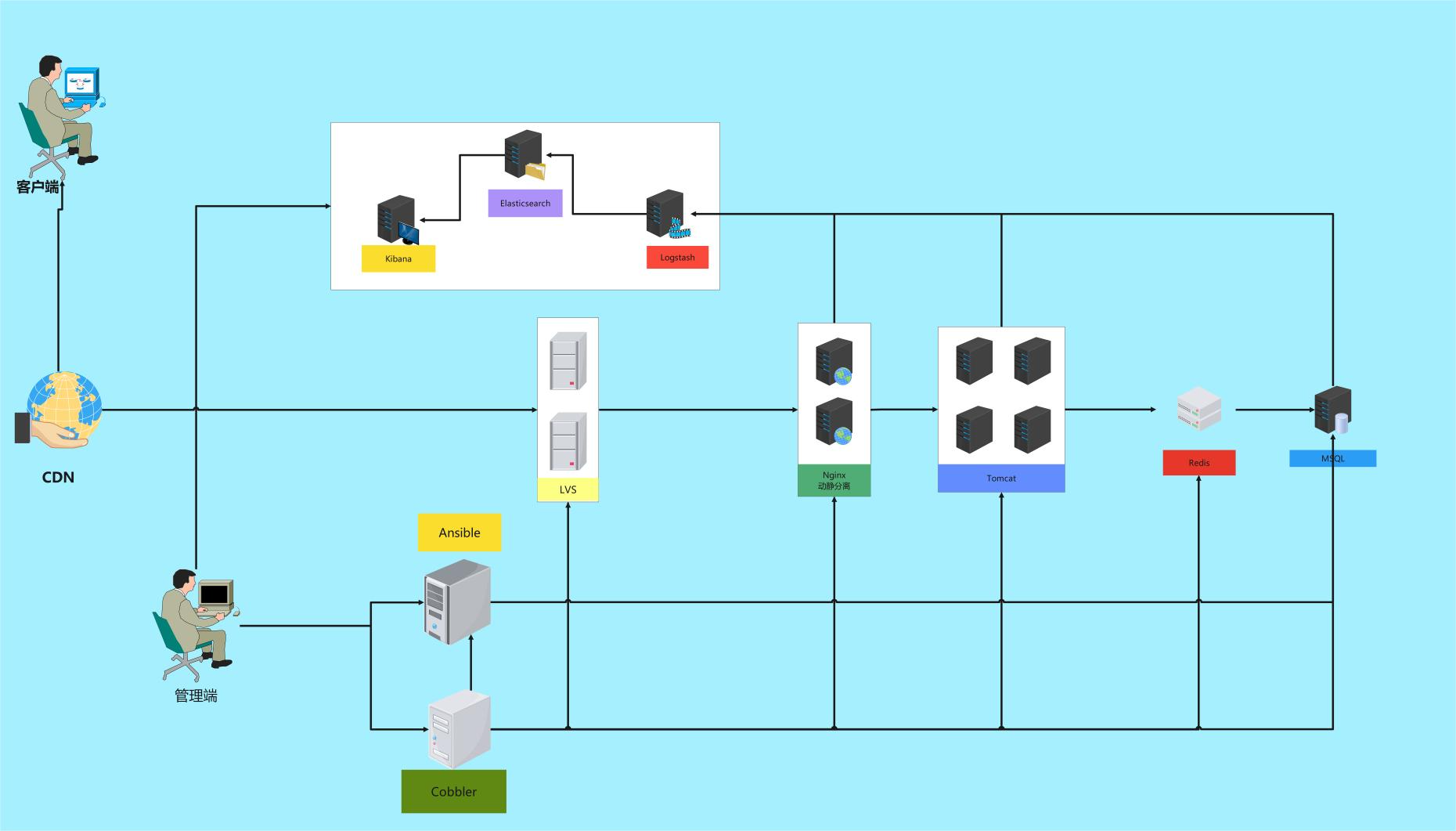

Deploying an E-commerce Website with Ansible

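1. Suppose you are a system operations engineer at a company that runs an e-commerce website. To handle the traffic of the Double 11 (Singles' Day) sale, the company is upgrading its IT architecture and has bought a batch of new servers, which must be tested and put into production as quickly as possible. The batch includes 2 LVS load balancers, 2 servers that take user traffic, and 4 servers that handle dynamic page requests. The back-end servers must solve the session inconsistency problem, so that a user sees the same session no matter which server handles the request. The back-end database is MySQL, and it must be backed up automatically every 2 hours.

2. To make deployment efficient, set up an Ansible host so that operations staff can manage the back-end servers centrally, for example pushing configuration files and managing services (this must be implemented with Ansible).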

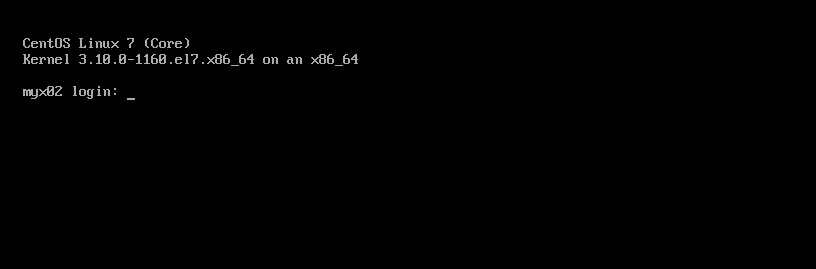

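3. The new servers have no operating system installed, so you need to install the operating system on all of them in bulk.

4. To view server logs in real time, deploy a log management system. The logs include the website logs and the database logs.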

| Name | IP | Role |
|---|---|---|
| Cobbler | 10.0.0.8 | Bulk OS installation |
| Ansible | 10.0.0.9 | Management host |
| LVS-1 | 10.0.0.10 | Load balancing |
| LVS-2 | 10.0.0.11 | Load balancing |
| Nginx-1, Logstash | 10.0.0.12 | Static/dynamic separation |
| Nginx-2, Logstash | 10.0.0.13 | Static/dynamic separation |
| Tomcat-1, Logstash | 10.0.0.14 | Dynamic content |
| Tomcat-2, Logstash | 10.0.0.15 | Dynamic content |
| Tomcat-3, Logstash | 10.0.0.16 | Dynamic content |
| Tomcat-4, Logstash | 10.0.0.17 | Dynamic content |
| Redis | 10.0.0.18 | Session consistency |
| MySQL, Logstash | 10.0.0.19 | Data storage |
| Elasticsearch | 10.0.0.20 | Log storage |
| Kibana | 10.0.0.21 | Log search and visualization |

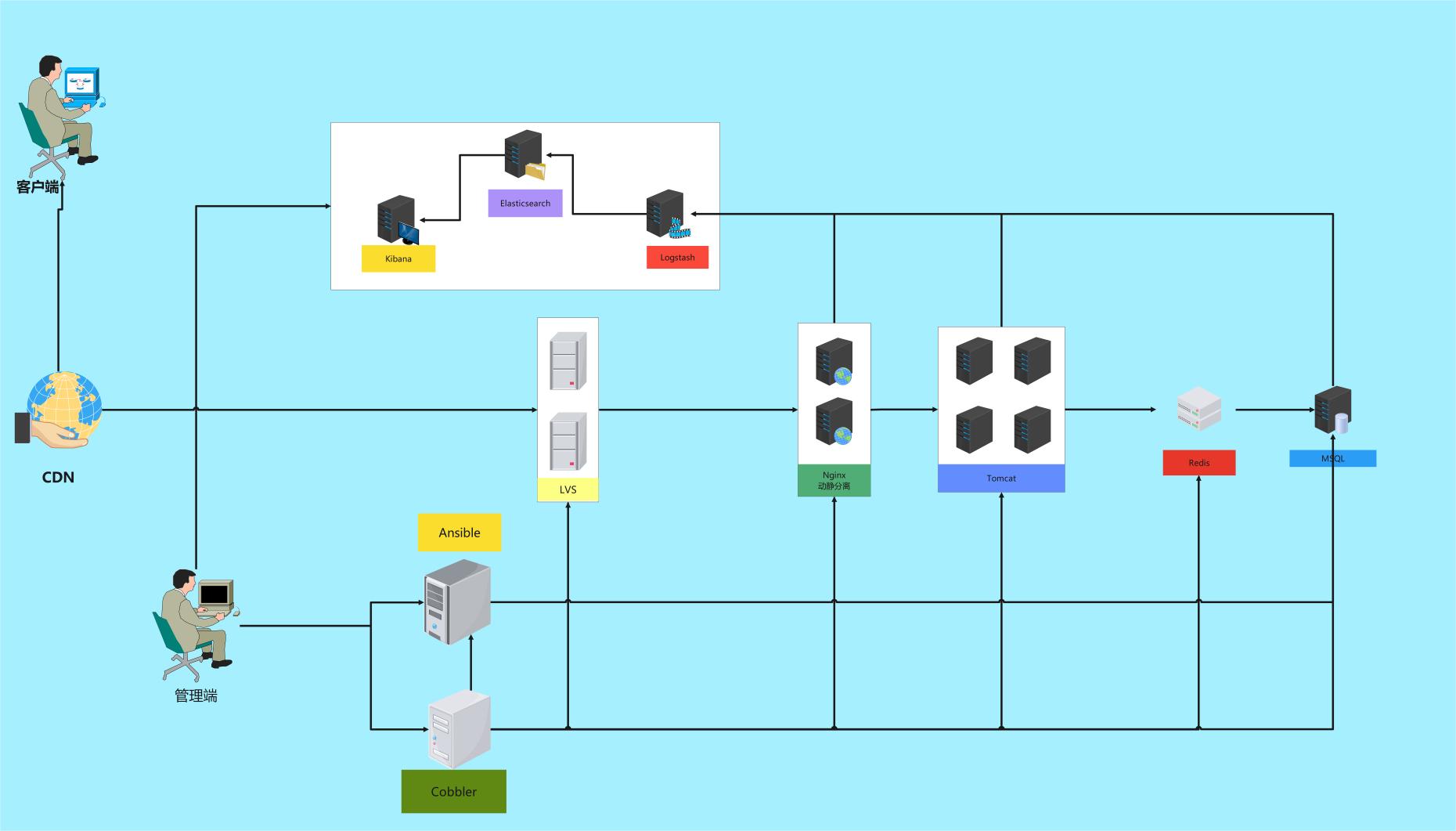

Cobbler bulk OS installation

# Install dependencies

[root@cobbler~]$ yum -y install epel-release

# Install Cobbler and related packages

[root@cobbler~]$ yum install -y httpd dhcp tftp python-ctypes cobbler xinetd cobbler-web pykickstart

# Start the services

[root@cobbler~]$ systemctl enable --now cobblerd

[root@cobbler~]$ systemctl enable --now httpd

# Edit the configuration file

[root@cobbler/var/lib/cobbler/kickstarts]$ vim /etc/cobbler/settings

server: 10.0.0.8

next_server: 10.0.0.8

manage_dhcp: 1 # let Cobbler manage DHCP

manage_rsync: 1 # let Cobbler manage rsync

# Enable TFTP

[root@cobbler~]$ vim /etc/xinetd.d/tftp

disable = no # change to no

[root@cobbler~]$ systemctl restart xinetd

[root@cobbler~]$ systemctl enable xinetd

# Provide the boot loader files Cobbler needs (copied from the local syslinux package)

[root@cobbler~]$ cp -r /usr/share/syslinux/* /var/lib/cobbler/loaders/

# Enable the rsync service

[root@cobbler~]$ systemctl enable --now rsyncd

# Change the default password

[root@cobbler~]$ openssl passwd -1 -salt "$RANDOM" '123456'

$1$12163$nupRUhAqnPp7tk7JSScf51

[root@cobbler~]$ vim /etc/cobbler/settings

default_password_crypted: "$1$12163$nupRUhAqnPp7tk7JSScf51"
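The interactive `vim` edit can also be scripted. The following is a minimal sketch, assuming the settings file already contains a `default_password_crypted:` line (the stock Cobbler settings file does). The helper name `set_default_password` is hypothetical.

```shell
# set_default_password FILE HASH
# Replace the default_password_crypted line in FILE with HASH.
# Hypothetical helper; assumes the key already exists in FILE.
set_default_password() {
    settings_file=$1
    crypted=$2
    # "|" is a safe sed delimiter here: MD5-crypt hashes only contain
    # letters, digits, ".", "/" and "$", and none of these is special
    # in a sed replacement when "|" is the delimiter.
    sed -i "s|^default_password_crypted:.*|default_password_crypted: \"$crypted\"|" "$settings_file"
}

# Example (not executed here):
#   hash=$(openssl passwd -1 -salt "$RANDOM" '123456')
#   set_default_password /etc/cobbler/settings "$hash"
#   systemctl restart cobblerd && cobbler sync
```

Only the matching line is rewritten, so the rest of the settings file is left as it was.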

# Configure DHCP

[root@cobbler ~]$ vim /etc/cobbler/dhcp.template

ddns-update-style interim;

allow booting;

allow bootp;

ignore client-updates;

set vendorclass = option vendor-class-identifier;

option pxe-system-type code 93 = unsigned integer 16;

subnet 10.0.0.0 netmask 255.255.255.0 { # subnet served by this DHCP server

option routers 10.0.0.1; # gateway

option domain-name-servers 10.0.0.1;

option subnet-mask 255.255.255.0;

range dynamic-bootp 10.0.0.200 10.0.0.254; # address range handed out to clients

default-lease-time 21600;

max-lease-time 43200;

next-server $next_server;

# Restart Cobbler

[root@cobbler ~]$ systemctl restart cobblerd

[root@cobbler ~]$ cobbler sync

# Mount the installation image

[root@cobbler~]$ mount /dev/cdrom /mnt

mount: /dev/sr0 is write-protected, mounting read-only

# Import the image

[root@cobbler/mnt]$ cobbler import --path=/mnt --name=centos-7 --arch=x86_64

task started: 2024-01-13_153552_import

task started (id=Media import, time=Sat Jan 13 15:35:52 2024)

Found a candidate signature: breed=suse, version=opensuse15.0

Found a candidate signature: breed=suse, version=opensuse15.1

Found a candidate signature: breed=redhat, version=rhel6

Found a candidate signature: breed=redhat, version=rhel7

Found a matching signature: breed=redhat, version=rhel7

Adding distros from path /var/www/cobbler/ks_mirror/centos-7-x86_64:

creating new distro: centos-7-x86_64

trying symlink: /var/www/cobbler/ks_mirror/centos-7-x86_64 -> /var/www/cobbler/links/centos-7-x86_64

creating new profile: centos-7-x86_64

associating repos

checking for rsync repo(s)

checking for rhn repo(s)

checking for yum repo(s)

starting descent into /var/www/cobbler/ks_mirror/centos-7-x86_64 for centos-7-x86_64

processing repo at : /var/www/cobbler/ks_mirror/centos-7-x86_64

need to process repo/comps: /var/www/cobbler/ks_mirror/centos-7-x86_64

looking for /var/www/cobbler/ks_mirror/centos-7-x86_64/repodata/*comps*.xml

Keeping repodata as-is :/var/www/cobbler/ks_mirror/centos-7-x86_64/repodata

*** TASK COMPLETE ***

# List the imported distros and profiles

[root@cobbler//]$ cobbler list

distros:

centos-7-x86_64

profiles:

centos-7-x86_64

systems:

repos:

images:

mgmtclasses:

packages:

files:

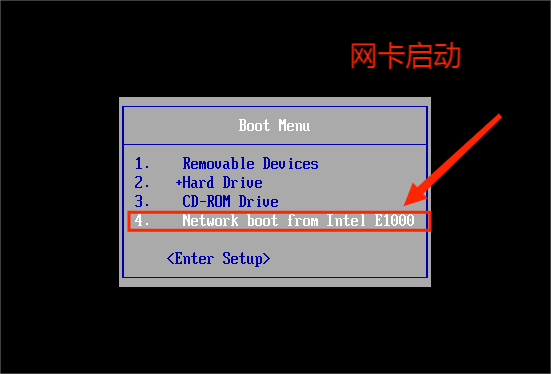

# Change the title shown in the PXE boot menu

[root@cobbler~]$ cat /etc/cobbler/pxe/pxedefault.template

DEFAULT menu

PROMPT 0

MENU TITLE Cobbler | https://blog.mengyunxi.cn

TIMEOUT 200

TOTALTIMEOUT 6000

ONTIMEOUT $pxe_timeout_profile

LABEL local

MENU LABEL (local)

MENU DEFAULT

LOCALBOOT -1

$pxe_menu_items

MENU end

# Sync the configuration

[root@cobbler~]$ cobbler sync

# Boot all machines from the network card (PXE)

Ansible

# Install Ansible

[root@ansible~]$ yum install ansible -y

# Enable a few optimizations

[root@ansible~]$ vim /etc/ansible/ansible.cfg

record_host_keys=False

log_path = /var/log/ansible.log

module_name = shell

# Write the host inventory

[root@ansible~]$ vim /etc/ansible/hosts

[lvs]

10.0.0.10

10.0.0.11

[logstash]

10.0.0.12

10.0.0.13

10.0.0.14

10.0.0.15

10.0.0.16

10.0.0.17

10.0.0.19

[nginx]

10.0.0.12

10.0.0.13

[tomcat]

10.0.0.14

10.0.0.15

10.0.0.16

10.0.0.17

[redis]

10.0.0.18

[mysql]

10.0.0.19

[elasticsearch]

10.0.0.20

[kibana]

10.0.0.21
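Before running any playbook it helps to confirm that each group in the inventory contains the hosts listed in the address table. The following is a minimal sketch of such a check. `group_hosts` is a hypothetical helper, and it only understands the plain `[group]` sections used in this inventory.

```shell
# group_hosts INVENTORY GROUP
# Print the hosts listed under [GROUP] in an INI-style inventory.
# Hypothetical helper for quick checks; it does not handle :children
# or :vars sections, which this inventory does not use.
group_hosts() {
    awk -v group="[$2]" '
        /^\[/ { in_group = ($0 == group); next }   # section header
        in_group && NF && $1 !~ /^#/ { print $1 }  # host line
    ' "$1"
}

# Example (not executed here):
#   group_hosts /etc/ansible/hosts tomcat
#   ansible all -m ping    # real connectivity check over SSH
```

The helper only reads the file. `ansible all -m ping` is still the check that proves the control host can reach every machine.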

LVS + Keepalived

[root@ansible/data/lvs]$ vim lvs.yml

- hosts: lvs
  remote_user: root
  gather_facts: no
  tasks:
    - name: copy ifcfg-ens33
      copy: src=/data/ifcfg-ens33 dest=/etc/sysconfig/network-scripts/ mode=644
    - name: copy ifcfg-lo:0
      copy: src=/data/ifcfg-lo:0 dest=/etc/sysconfig/network-scripts/ mode=644
    # Append the setting only if it is missing, so repeated runs do not add duplicate lines
    - name: modify sysctl.conf
      shell: grep -q '^net.ipv4.ip_forward=1' /etc/sysctl.conf || echo net.ipv4.ip_forward=1 >> /etc/sysctl.conf; sysctl -p
    - name: Install keepalived ipvsadm
      yum: name=keepalived,ipvsadm state=present
    # -p 50 enables persistence with a 50-second timeout; -g selects direct routing (DR)
    - name: lvs
      shell: ipvsadm -A -t 10.0.0.100:80 -s rr -p 50 && ipvsadm -a -t 10.0.0.100:80 -r 10.0.0.12 -g && ipvsadm -a -t 10.0.0.100:80 -r 10.0.0.13 -g && ipvsadm -S > /etc/sysconfig/ipvsadm
    - name: start ipvsadm
      service: name=ipvsadm state=started enabled=yes
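The `ipvsadm` calls in the `lvs` task follow a fixed pattern: one `-A` line creates the virtual service, then one `-a` line adds each real server. The following is a minimal sketch that prints the commands for review instead of running them. `ipvs_rules` is a hypothetical helper, not part of ipvsadm; the flags it emits (`-A`, `-a`, `-t`, `-r`, `-s`, `-p`, `-g`) are standard ipvsadm options.

```shell
# ipvs_rules VIP PORT REAL_SERVER...
# Print (not run) the ipvsadm commands for a DR-mode virtual service
# with round-robin scheduling and 50 s persistence.
ipvs_rules() {
    vip=$1
    port=$2
    shift 2
    echo "ipvsadm -A -t $vip:$port -s rr -p 50"
    for real_server in "$@"; do
        echo "ipvsadm -a -t $vip:$port -r $real_server -g"
    done
}

# Values taken from this deployment: VIP 10.0.0.100, two Nginx real servers.
ipvs_rules 10.0.0.100 80 10.0.0.12 10.0.0.13
```

Printing first makes it easy to spot a mistyped address before the rules reach the kernel. Piping the output to `sh` on a director would apply them.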

[root@ansible/data/nginx]$ cat nginx.yml

---
- hosts: nginx
  remote_user: root
  gather_facts: no
  tasks:
    - name: add group nginx
      tags: user
      group: name=nginx state=present
    - name: add user nginx
      user: name=nginx state=present group=nginx
    - name: Install keepalived Nginx
      yum: name={{ item }} state=present
      with_items:
        - keepalived
        - nginx
    - name: config
      copy: src=/data/nginx/nginx.conf dest=/etc/nginx/nginx.conf
      notify:
        - Restart Nginx
        - Check Nginx Process
    - name: ifcfg
      copy: src=ifcfg-lo:0 dest=/etc/sysconfig/network-scripts/
      notify:
        - Restart Network
    # Written to a drop-in file (the name lvs-rs.conf is an assumption) so that
    # the existing contents of /etc/sysctl.conf are not overwritten
    - name: modify sysctl
      shell: echo -e "net.ipv4.conf.all.arp_ignore = 1\nnet.ipv4.conf.all.arp_announce = 2\nnet.ipv4.conf.lo.arp_ignore = 1\nnet.ipv4.conf.lo.arp_announce = 2" > /etc/sysctl.d/lvs-rs.conf && sysctl -p /etc/sysctl.d/lvs-rs.conf
  handlers:
    - name: Restart Nginx
      service: name=nginx state=restarted enabled=yes
    - name: Check Nginx Process
      shell: killall -0 nginx > /tmp/nginx.log
    - name: Restart Network
      service: name=network state=restarted
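Writing kernel parameters with `echo` and a shell redirect is easy to get wrong: `>>` appends a duplicate line on every run, and `>` discards whatever else was in the file. The following is a minimal sketch of an idempotent alternative. `set_sysctl_key` is a hypothetical helper, and the drop-in file name in the usage comment is an assumption.

```shell
# set_sysctl_key FILE KEY VALUE
# Ensure FILE contains exactly one "KEY = VALUE" line and leave every
# other line untouched. Safe to run repeatedly.
set_sysctl_key() {
    conf_file=$1
    key=$2
    value=$3
    touch "$conf_file"
    tmp_file=$(mktemp)
    # Drop any existing assignment of KEY (with or without spaces
    # around "="), then append the desired one.
    awk -v key="$key" '
        { line = $0; sub(/^[ \t]+/, "", line); split(line, parts, /[ \t]*=/) }
        parts[1] != key { print }
    ' "$conf_file" > "$tmp_file"
    printf '%s = %s\n' "$key" "$value" >> "$tmp_file"
    cat "$tmp_file" > "$conf_file"   # keeps the original owner and mode
    rm -f "$tmp_file"
}

# Example (not executed here):
#   set_sysctl_key /etc/sysctl.d/90-lvs.conf net.ipv4.ip_forward 1
#   sysctl --system
```

Because the old assignment is removed before the new one is appended, running the helper twice leaves the file in the same state as running it once.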

# ifcfg-lo:0

DEVICE=lo:0

IPADDR=10.0.0.100

NETMASK=255.255.255.255

# If you're having problems with gated making 127.0.0.0/8 a martian,

# you can change this to something else (255.255.255.255, for example)

ONBOOT=yes

NAME=loopback

# keepalived-Master

! Configuration File for keepalived

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 10.0.0.10

smtp_connect_timeout 30

router_id LVS_DEVEL

vrrp_skip_check_adv_addr

}

vrrp_instance VI_1 {

state MASTER

interface ens33

virtual_router_id 66

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

10.0.0.100

}

}

virtual_server 10.0.0.100 80 {

delay_loop 6

lb_algo rr

lb_kind DR

persistence_timeout 50

protocol TCP

real_server 10.0.0.12 80 {

weight 1

TCP_CHECK {

connect_timeout 10

nb_get_retry 3

delay_before_retry 3

connect_port 80

}

}

real_server 10.0.0.13 80 {

weight 1

TCP_CHECK {

connect_timeout 10 # connection timeout in seconds

nb_get_retry 3 # number of retries

delay_before_retry 3 # delay between retries in seconds

connect_port 80 # port used for the health check

}

}

}

# keepalived-Backup

! Configuration File for keepalived

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 10.0.0.11

smtp_connect_timeout 30

router_id LVS_DEVEL

vrrp_skip_check_adv_addr

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

virtual_router_id 66

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

10.0.0.100

}

}

virtual_server 10.0.0.100 80 {

delay_loop 6

lb_algo rr

lb_kind DR

persistence_timeout 50

protocol TCP

real_server 10.0.0.12 80 {

weight 1

TCP_CHECK {

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

connect_port 80

}

}

real_server 10.0.0.13 80 {

weight 1

TCP_CHECK {

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

connect_port 80

}

}

}
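For VRRP failover to work, the MASTER and BACKUP files must agree on `virtual_router_id` and `auth_pass`, and the MASTER must have the higher `priority`. The following is a small sketch of a pre-flight check that compares the two files before they are pushed. The helper names are hypothetical, and the parser only handles the one-directive-per-line layout used in these files.

```shell
# vrrp_value FILE DIRECTIVE
# Print the first value of DIRECTIVE (for example "priority") in FILE.
vrrp_value() {
    awk -v directive="$2" '$1 == directive { print $2; exit }' "$1"
}

# check_vrrp_pair MASTER_CONF BACKUP_CONF
# Return 0 if the two files form a consistent VRRP pair, 1 otherwise.
check_vrrp_pair() {
    for directive in virtual_router_id auth_pass; do
        if [ "$(vrrp_value "$1" "$directive")" != "$(vrrp_value "$2" "$directive")" ]; then
            echo "mismatch: $directive" >&2
            return 1
        fi
    done
    # The node with the higher priority wins the election.
    master_prio=$(vrrp_value "$1" priority)
    backup_prio=$(vrrp_value "$2" priority)
    if [ "${master_prio:-0}" -le "${backup_prio:-0}" ]; then
        echo "master priority must be higher than backup priority" >&2
        return 1
    fi
}

# Example (file names are assumptions, not executed here):
#   check_vrrp_pair keepalived-master.conf keepalived-backup.conf
```

A mismatch in `virtual_router_id` or `auth_pass` makes the two nodes ignore each other's advertisements, which typically ends with both holding the virtual IP at once.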

Nginx: static resources + load balancing

# Nginx.conf

[root@ansible/data/nginx]$ cat nginx.conf

# Tomcat back ends for dynamic requests. Stock Tomcat listens on 8080;
# port 80 here assumes the HTTP connector in server.xml was changed.
upstream web02 {
    server 10.0.0.14:80;
    server 10.0.0.15:80;
    server 10.0.0.16:80;
    server 10.0.0.17:80;
}

server {
    listen 80;
    listen [::]:80;
    server_name _;
    root /usr/share/nginx/html;

    # Load configuration files for the default server block.
    include /etc/nginx/default.d/*.conf;

    error_page 404 /404.html;
    location = /404.html {
    }

    error_page 500 502 503 504 /50x.html;
    location = /50x.html {
    }

    # Static resources are served locally from root. Proxying "/" to an
    # upstream made of 10.0.0.12 and 10.0.0.13 would send every request
    # back to these same Nginx servers in a loop.
    location / {
        index index.html index.htm;
    }

    # Dynamic pages go to the Tomcat group.
    location ~ \.jsp$ {
        proxy_pass http://web02;
    }
}

Tomcat: dynamic resources

[root@ansible/data/tomcat]$ cat tomcat.yml

- hosts: tomcat
  remote_user: root
  gather_facts: no
  tasks:
    - name: tar jdk
      unarchive:
        src: /data/tomcat/jdk-8u341-linux-x64.tar.gz
        dest: /usr/local/src/
    - name: copy jdk.sh
      copy:
        src: /data/tomcat/jdk.sh
        dest: /etc/profile.d/
    # Each task runs in its own shell, so this only checks that the file
    # loads without errors; login shells pick it up from /etc/profile.d
    - name: source jdk.sh
      shell: source /etc/profile.d/jdk.sh
    - name: tar tomcat
      unarchive:
        src: /data/tomcat/apache-tomcat-9.0.54.tar.gz
        dest: /usr/local/
    - name: copy web.xml
      copy:
        src: /data/tomcat/apache-tomcat-9.0.54/conf/web.xml
        dest: /usr/local/apache-tomcat-9.0.54/conf/web.xml
      notify:
        - Restart Tomcat
    - name: copy context.xml
      copy:
        src: /data/tomcat/apache-tomcat-9.0.54/conf/context.xml
        dest: /usr/local/apache-tomcat-9.0.54/conf/context.xml
      notify:
        - Restart Tomcat
    # The script name must match the service name used below
    - name: service tomcat
      copy:
        src: /data/tomcat/service.sh
        dest: /etc/init.d/tomcat
        mode: "0755"
    - name: start tomcat
      service:
        name: tomcat
        state: started
  handlers:
    - name: Restart Tomcat
      service:
        name: tomcat
        state: restarted

[root@ansible/data/tomcat]$ cat context.xml

<Context>

<!-- Default set of monitored resources. If one of these changes, the -->

<!-- web application will be reloaded. -->

<WatchedResource>WEB-INF/web.xml</WatchedResource>

<WatchedResource>${catalina.base}/conf/web.xml</WatchedResource>

<!-- Uncomment this to disable session persistence across Tomcat restarts -->

<!--

<Manager pathname="" />

-->

<!-- Add the following lines -->

<Valve className="com.s.tomcat.redissessions.RedisSessionHandlerValve" />

<Manager className="com.s.tomcat.redissessions.RedisSessionManager"

host="10.0.0.18"

port="6379"

database="0"

password="123456"

maxInactiveInterval="60" />

</Context>

[root@ansible/data/tomcat]$ cat service.sh

#!/bin/bash

# description: Tomcat8 Start Stop Restart

# processname: tomcat8

# chkconfig: 2345 20 80

# Load JAVA_HOME; init scripts do not read /etc/profile.d on their own

. /etc/profile.d/jdk.sh

CATALINA_HOME=/usr/local/apache-tomcat-9.0.54

case $1 in

start)

sh $CATALINA_HOME/bin/startup.sh

;;

stop)

sh $CATALINA_HOME/bin/shutdown.sh

;;

restart)

sh $CATALINA_HOME/bin/shutdown.sh

sleep 2

sh $CATALINA_HOME/bin/startup.sh

;;

*)

echo 'please use : tomcat {start | stop | restart}'

;;

esac

exit 0
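The `restart` branch waits a fixed two seconds between shutdown and startup, which may be too short when Tomcat is still draining requests. The following sketch polls the listening port instead. The helper names are hypothetical, and port 8080 in the usage comment is Tomcat's stock HTTP connector port, so adjust it if the connector was moved.

```shell
#!/bin/bash
# port_open HOST PORT
# Succeed if a TCP connection to HOST:PORT can be opened.
# Relies on bash's built-in /dev/tcp redirection, so it needs bash.
port_open() {
    (exec 3<>"/dev/tcp/$1/$2") 2>/dev/null
}

# wait_until_closed HOST PORT TIMEOUT_SECONDS
# Poll once per second until nothing listens on HOST:PORT.
# Returns 1 if the port is still open after TIMEOUT_SECONDS.
wait_until_closed() {
    remaining=$3
    while port_open "$1" "$2"; do
        [ "$remaining" -gt 0 ] || return 1
        remaining=$((remaining - 1))
        sleep 1
    done
}

# Example for the restart branch (not executed here):
#   sh $CATALINA_HOME/bin/shutdown.sh
#   wait_until_closed 127.0.0.1 8080 30
#   sh $CATALINA_HOME/bin/startup.sh
```

The connection attempt runs in a subshell, so the probe's file descriptor is closed as soon as the check returns.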

[root@ansible/data/tomcat]$ cat jdk.sh

JAVA_HOME=/usr/local/src/jdk1.8.0_341

JAVA_BIN=$JAVA_HOME/bin

JRE_HOME=$JAVA_HOME/jre

JRE_BIN=$JRE_HOME/bin

PATH=$JAVA_BIN:$JRE_BIN:$PATH

CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar:$JRE_HOME/lib

export JAVA_HOME JRE_HOME PATH CLASSPATH

Redis

[root@ansible/data/redis]$ cat redis.yml

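- hosts: redis
  remote_user: root
  gather_facts: no
  tasks:
    - name: yum gcc
      yum: name=gcc state=present
    - name: tar redis
      unarchive:
        src: /data/redis/redis-4.0.9.tar.gz
        dest: /usr/local/src/
    - name: make
      shell: cd /usr/local/src/redis-4.0.9 && make MALLOC=libc && make install
    - name: create config directory
      file:
        path: /usr/local/redis
        state: directory
    - name: copy redis.conf
      copy:
        src: /data/redis/redis.conf
        dest: /usr/local/redis/redis.conf
    # "make install" places redis-server in /usr/local/bin
    - name: start redis
      shell: /usr/local/bin/redis-server /usr/local/redis/redis.conf

# The session-manager jars belong in Tomcat's lib/ directory, so this play
# targets the tomcat group. Assumption: the zip sits in /data/redis/ on the
# Ansible host and unpacks the three jars directly into dest.
- hosts: tomcat
  remote_user: root
  gather_facts: no
  tasks:
    - name: yum unzip
      yum: name=unzip state=present
    - name: unzip redis
      unarchive:
        src: /data/redis/tomcat8_redis_session.zip
        dest: /usr/local/src/
    - name: tomcat-redis
      shell: cd /usr/local/src/ && cp -a commons-pool2-2.2.jar jedis-2.5.2.jar tomcat8.5-redis-session-manager.jar /usr/local/apache-tomcat-9.0.54/lib/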

[root@ansible/data/redis]$ cat redis.conf

# Bind address (the Redis host); redis.conf comments must be on their own line
bind 10.0.0.18
protected-mode yes
# Port
port 6379
daemonize yes
# Authentication password
requirepass 123456

MySQL database

[root@ansible/data/mysql]$ cat mysql.yml

---
- hosts: mysql
  remote_user: root
  tasks:
    - name: install mysql
      yum: name=mysql-server state=present
      when: ansible_distribution_major_version == "6"
    - name: install mariadb
      yum: name=mariadb-server state=present
      when: ansible_distribution_major_version == "7"
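The requirements call for an automatic database backup every 2 hours, which the playbook does not cover. One way to do it is a small dump script driven by cron. The following is a sketch under stated assumptions: the script path, the backup directory, the 7-day retention and the function names are all hypothetical, and `mysqldump` is expected to read its credentials from `~/.my.cnf`.

```shell
# Sketch of /usr/local/bin/mysql_backup.sh (the path is an assumption).
BACKUP_DIR=${BACKUP_DIR:-/data/backup/mysql}   # assumed location
KEEP_DAYS=${KEEP_DAYS:-7}                      # assumed retention

# backup_file_name: print a timestamped dump path,
# for example /data/backup/mysql/all-20240113-1400.sql
backup_file_name() {
    printf '%s/all-%s.sql\n' "$BACKUP_DIR" "$(date +%Y%m%d-%H%M)"
}

# prune_old_backups: delete compressed dumps older than KEEP_DAYS days.
prune_old_backups() {
    find "$BACKUP_DIR" -name 'all-*.sql.gz' -mtime +"$KEEP_DAYS" -delete
}

# run_backup: dump all databases, compress the dump, prune old ones.
run_backup() {
    mkdir -p "$BACKUP_DIR" || return 1
    target=$(backup_file_name)
    # --single-transaction takes a consistent InnoDB snapshot without
    # locking the tables for the duration of the dump.
    if mysqldump --all-databases --single-transaction > "$target"; then
        gzip -f "$target"
        prune_old_backups
    else
        rm -f "$target"   # do not keep a partial dump
        return 1
    fi
}

# Dump only when called as "mysql_backup.sh run", so the functions can
# be sourced and inspected without touching the database.
if [ "${1:-}" = "run" ]; then
    run_backup
fi
```

A cron entry such as `0 */2 * * * root /usr/local/bin/mysql_backup.sh run` in a file under `/etc/cron.d/` would run it at the top of every second hour. The script and the cron file could be pushed to the `mysql` group with two Ansible `copy` tasks.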

ELK stack

# The oss-7.x repository used here ships its packages under "-oss" names;
# the services keep the plain names.

# Elasticsearch
- hosts: elasticsearch
  remote_user: root
  become: yes
  tasks:
    - name: Install Elasticsearch
      yum:
        name: elasticsearch-oss
        state: present
    - name: Start Elasticsearch service
      service:
        name: elasticsearch
        state: started

# logstash
- hosts: logstash
  remote_user: root
  become: yes
  tasks:
    - name: Install Logstash
      yum:
        name: logstash-oss
        state: present
    - name: Start Logstash service
      service:
        name: logstash
        state: started

# kibana
- hosts: kibana
  remote_user: root
  become: yes
  tasks:
    - name: Install Kibana
      yum:
        name: kibana-oss
        state: present
    - name: Start Kibana service
      service:
        name: kibana
        state: started

# yum.repo

[elasticsearch]

name=Elasticsearch Repository

baseurl=https://artifacts.elastic.co/packages/oss-7.x/yum

gpgcheck=1

gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch

enabled=1

autorefresh=1

type=rpm-md

[logstash]

name=Logstash Repository

baseurl=https://artifacts.elastic.co/packages/oss-7.x/yum

gpgcheck=1

gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch

enabled=1

autorefresh=1

type=rpm-md

[kibana]

name=Kibana Repository

baseurl=https://artifacts.elastic.co/packages/oss-7.x/yum

gpgcheck=1

gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch

enabled=1

autorefresh=1

type=rpm-md